Provably exact artificial intelligence for nuclear and particle physics

MIT-led team uses AI and machine learning to explore fundamental forces.

The Standard Model of particle physics describes all the known elementary particles and three of the four fundamental forces governing the universe: everything except gravity. These three forces (electromagnetic, strong, and weak) govern how particles form, how they interact, and how they decay.

Studying particle and nuclear physics within this framework, however, is difficult, and relies on large-scale numerical studies. For example, many aspects of the strong force require numerically simulating the dynamics at the scale of 1/10th to 1/100th the size of a proton to answer fundamental questions about the properties of protons, neutrons, and nuclei.

“Ultimately, we are computationally limited in the study of proton and nuclear structure using lattice field theory,” says assistant professor of physics Phiala Shanahan. “There are a lot of interesting problems that we know how to address in principle, but we just don’t have enough compute, even though we run on the largest supercomputers in the world.”

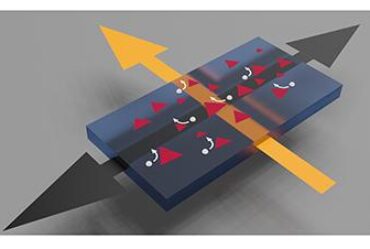

To push past these limitations, Shanahan leads a group that combines theoretical physics with machine learning models. In their paper “Equivariant flow-based sampling for lattice gauge theory,” published this month in Physical Review Letters, they show how incorporating the symmetries of physics theories into machine learning and artificial intelligence architectures can provide much faster algorithms for theoretical physics.

“We are using machine learning not to analyze large amounts of data, but to accelerate first-principles theory in a way which doesn’t compromise the rigor of the approach,” Shanahan says. “This particular work demonstrated that we can build machine learning architectures with some of the symmetries of the Standard Model of particle and nuclear physics built in, and accelerate the sampling problem we are targeting by orders of magnitude.”

Shanahan launched the project with MIT graduate student Gurtej Kanwar and with Michael Albergo, who is now at NYU. The project expanded to include Center for Theoretical Physics postdocs Daniel Hackett and Denis Boyda, NYU Professor Kyle Cranmer, and physics-savvy machine-learning scientists at Google DeepMind, Sébastien Racanière and Danilo Jimenez Rezende.

This month’s paper is one in a series aimed at enabling studies in theoretical physics that are currently computationally intractable. “Our aim is to develop new algorithms for a key component of numerical calculations in theoretical physics,” says Kanwar. “These calculations inform us about the inner workings of the Standard Model of particle physics, our most fundamental theory of matter. Such calculations are of vital importance to compare against results from particle physics experiments, such as the Large Hadron Collider at CERN, both to constrain the model more precisely and to discover where the model breaks down and must be extended to something even more fundamental.”

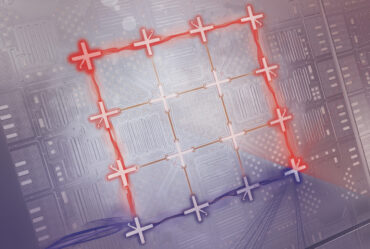

The only known systematically controllable method of studying the Standard Model of particle physics in the nonperturbative regime is based on a sampling of snapshots of quantum fluctuations in the vacuum. By measuring properties of these fluctuations, one can infer properties of the particles and collisions of interest.

This technique comes with challenges, Kanwar explains. “This sampling is expensive, and we are looking to use physics-inspired machine learning techniques to draw samples far more efficiently,” he says. “Machine learning has already made great strides on generating images, including, for example, recent work by NVIDIA to generate images of faces ‘dreamed up’ by neural networks. Thinking of these snapshots of the vacuum as images, we think it’s quite natural to turn to similar methods for our problem.”

Adds Shanahan, “In our approach to sampling these quantum snapshots, we optimize a model that takes us from a space that is easy to sample to the target space: given a trained model, sampling is then efficient since you just need to take independent samples in the easy-to-sample space, and transform them via the learned model.”
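The idea Shanahan describes can be illustrated with a toy sketch. The example below is not the group's model: it assumes a one-dimensional standard normal as the easy-to-sample space and uses a fixed affine map (the hypothetical `flow` function, with made-up `scale` and `shift` values) as a stand-in for a trained network. It shows how independent samples are pushed through the map and how the density of the transformed samples follows from the change-of-variables formula.

```python
import numpy as np

def sample_base(rng, n):
    """Draw n independent samples from the easy-to-sample space (standard normal)."""
    return rng.standard_normal(n)

def flow(z, scale, shift):
    """Hypothetical stand-in for a learned model: an invertible affine map.

    Returns the transformed samples and log|dx/dz| for each sample.
    """
    x = scale * z + shift
    log_det = np.full_like(z, np.log(abs(scale)))
    return x, log_det

def model_log_prob(z, log_det):
    """Change of variables: log q(x) = log r(z) - log|dx/dz|, with r standard normal."""
    log_r = -0.5 * z**2 - 0.5 * np.log(2.0 * np.pi)
    return log_r - log_det

rng = np.random.default_rng(0)
z = sample_base(rng, 10_000)                 # independent draws, cheap to generate
x, log_det = flow(z, scale=2.0, shift=1.0)   # transformed samples in the "target" space
log_q = model_log_prob(z, log_det)           # density the model assigns to each sample
```

In this toy case the transformed samples follow a normal distribution with mean 1 and standard deviation 2. Because every sample comes with a known model density, a sampler built this way can in principle be corrected against the true target distribution; how that correction is done in the group's work is not covered by this sketch.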

In particular, the group has introduced a framework for building machine-learning models that exactly respect a class of symmetries, called “gauge symmetries,” crucial for studying high-energy physics.
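To make the notion of a gauge symmetry concrete, here is a small numerical sketch. It assumes the simplest textbook setting, a U(1) theory on a small two-dimensional periodic lattice, where each link carries an angle; the array layout `theta[mu, x, y]` and the function names are illustrative choices, not taken from the paper. A gauge transformation shifts the link angles using an arbitrary angle at every site, yet the plaquettes (the sums of link angles around each elementary square) stay unchanged.

```python
import numpy as np

def plaquettes(theta):
    """Plaquette angle at each site for link angles theta[mu, x, y] (periodic lattice)."""
    t0, t1 = theta[0], theta[1]
    # theta_0(x) + theta_1(x + e0) - theta_0(x + e1) - theta_1(x)
    return t0 + np.roll(t1, -1, axis=0) - np.roll(t0, -1, axis=1) - t1

def gauge_transform(theta, alpha):
    """Apply a local U(1) transformation with one angle alpha[x, y] per site.

    Each link angle changes as theta_mu(x) -> theta_mu(x) + alpha(x) - alpha(x + e_mu).
    """
    out = np.empty_like(theta)
    out[0] = theta[0] + alpha - np.roll(alpha, -1, axis=0)
    out[1] = theta[1] + alpha - np.roll(alpha, -1, axis=1)
    return out

rng = np.random.default_rng(1)
L = 8
theta = rng.uniform(-np.pi, np.pi, size=(2, L, L))   # random link configuration
alpha = rng.uniform(-np.pi, np.pi, size=(L, L))      # random transformation at each site

before = np.cos(plaquettes(theta))
after = np.cos(plaquettes(gauge_transform(theta, alpha)))
invariant = np.allclose(before, after)               # plaquettes are unchanged
```

A model that respects this symmetry exactly assigns the same probability to `theta` and to any gauge-transformed copy of it, so it does not have to learn that equivalence from data.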

As a proof of principle, Shanahan and colleagues used their framework to train machine-learning models to simulate a theory in two dimensions, resulting in orders-of-magnitude efficiency gains over state-of-the-art techniques and more precise predictions from the theory. This paves the way for significantly accelerated research into the fundamental forces of nature using physics-informed machine learning.

The group’s first few papers as a collaboration discussed applying the machine-learning technique to a simple lattice field theory, and developed this class of approaches on compact, connected manifolds which describe the more complicated field theories of the Standard Model. Now they are working to scale the techniques to state-of-the-art calculations.

“I think we have shown over the past year that there is a lot of promise in combining physics knowledge with machine learning techniques,” says Kanwar. “We are actively thinking about how to tackle the remaining barriers in the way of performing full-scale simulations using our approach. I hope to see the first application of these methods to calculations at scale in the next couple of years. If we are able to overcome the last few obstacles, this promises to extend what we can do with limited resources, and I dream of performing calculations soon that give us novel insights into what lies beyond our best understanding of physics today.”

This idea of physics-informed machine learning is also known by the team as “ab-initio AI,” a key theme of the recently launched MIT-based National Science Foundation Institute for Artificial Intelligence and Fundamental Interactions (IAIFI), where Shanahan is research coordinator for physics theory.

Led by the Laboratory for Nuclear Science, the IAIFI comprises physics and AI researchers at MIT and at Harvard, Northeastern, and Tufts universities.

“Our collaboration is a great example of the spirit of IAIFI, with a team with diverse backgrounds coming together to advance AI and physics simultaneously,” says Shanahan. As well as research like Shanahan’s targeting physics theory, IAIFI researchers are also working to use AI to enhance the scientific potential of various facilities, including the Large Hadron Collider and the Laser Interferometer Gravitational-Wave Observatory, and to advance AI itself.